Abstract

We study best-of-N for large language models (LLMs) where the selection is based on majority voting. In particular, we analyze the limit N → ∞, which we denote as Best-of-∞. While this approach achieves impressive performance in the limit, it requires an infinite test-time budget. To address this, we propose an adaptive generation scheme that selects N based on answer agreement, thereby efficiently allocating inference-time computation. Beyond adaptivity, we extend the framework to weighted ensembles of multiple LLMs, showing that such mixtures can outperform any individual model. The optimal ensemble weighting is formulated and efficiently computed as a mixed-integer linear program. Extensive experiments demonstrate the effectiveness of our approach.
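To make the adaptive idea concrete, here is a minimal sketch of sampling answers one at a time and stopping once they agree strongly enough. The stopping rule (the leading answer's share reaching a fixed `threshold`) is a simplifying assumption for illustration, not necessarily the criterion used in the paper. The names `adaptive_majority_vote`, `sample_answer`, `min_samples`, `max_samples`, and `threshold` are hypothetical.

```python
import random
from collections import Counter
from typing import Callable, Hashable, Tuple


def adaptive_majority_vote(
    sample_answer: Callable[[], Hashable],
    min_samples: int = 3,
    max_samples: int = 50,
    threshold: float = 0.8,
) -> Tuple[Hashable, int]:
    """Sample answers until they agree, then return (majority answer, samples used).

    Assumed stopping rule: stop once the most frequent answer accounts for
    at least `threshold` of the samples drawn so far, or once `max_samples`
    generations have been spent.
    """
    counts: Counter = Counter()
    for n in range(1, max_samples + 1):
        counts[sample_answer()] += 1
        if n >= min_samples:
            top_answer, top_count = counts.most_common(1)[0]
            if top_count / n >= threshold:
                return top_answer, n  # strong agreement: stop early
    # Budget exhausted: fall back to the plain majority vote.
    return counts.most_common(1)[0][0], max_samples


if __name__ == "__main__":
    # Hypothetical stand-in for an LLM call: answers "42" most of the time.
    rng = random.Random(0)
    noisy_model = lambda: "42" if rng.random() < 0.7 else rng.choice(["41", "43"])
    answer, used = adaptive_majority_vote(noisy_model)
    print(f"answer={answer}, samples used={used}")
```

Under this rule, problems where the model is consistent stop after a few samples, while contested problems consume more of the budget, which is the sense in which computation is allocated adaptively.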

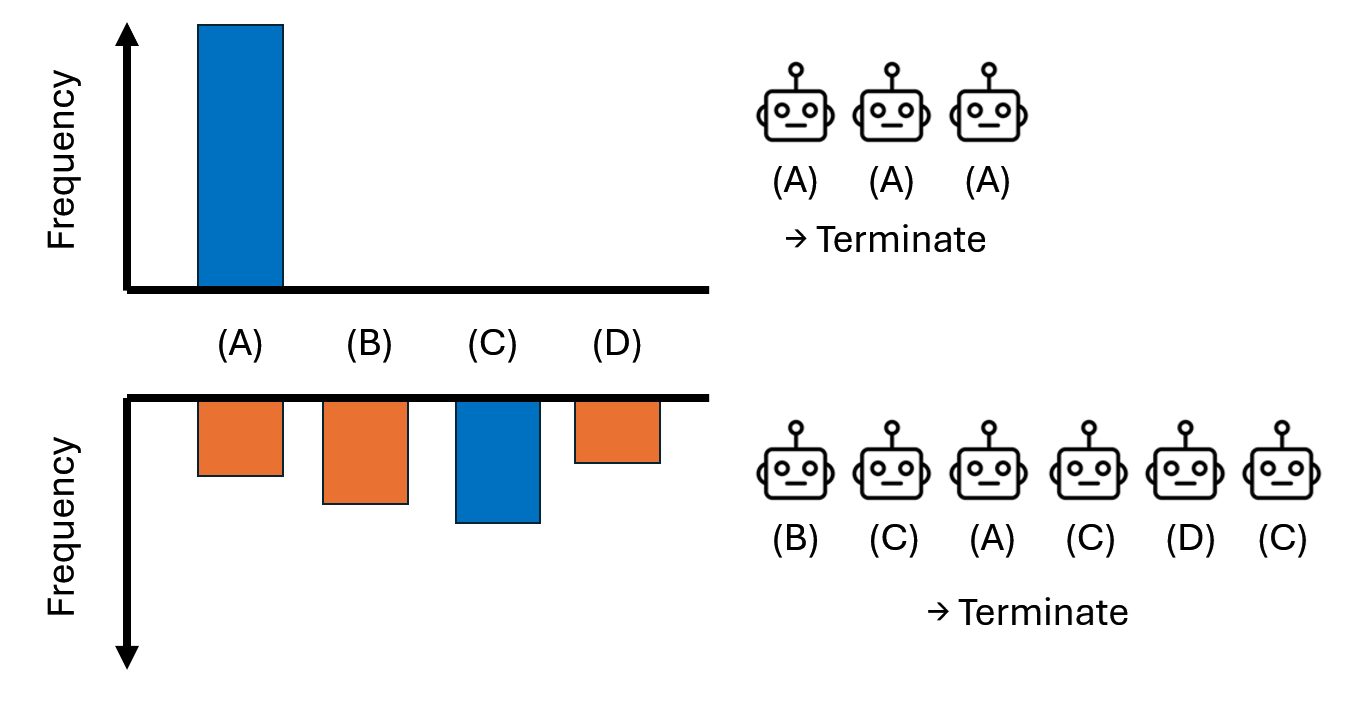

Adaptive Majority Voting Demo

Adaptive algorithm

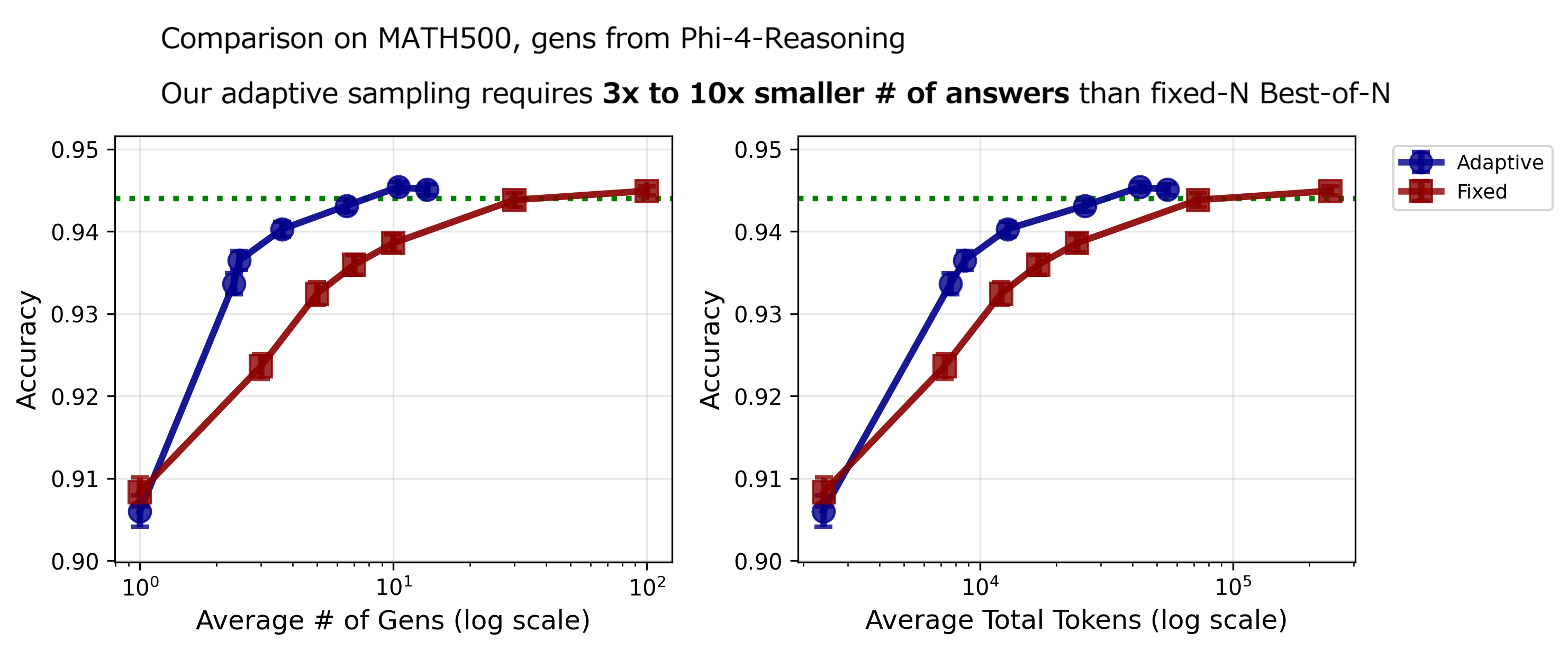

Adaptive sampling on MATH500

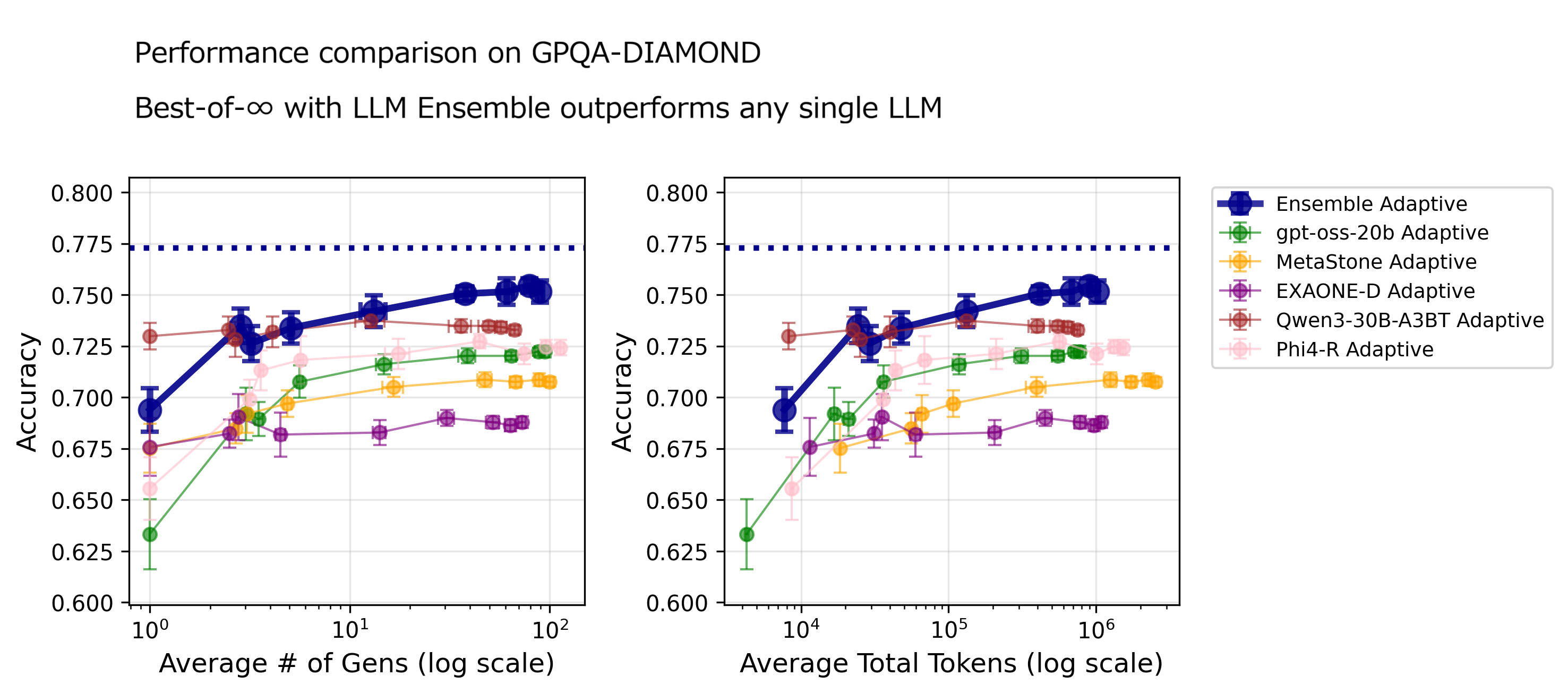

GPQA-Diamond results

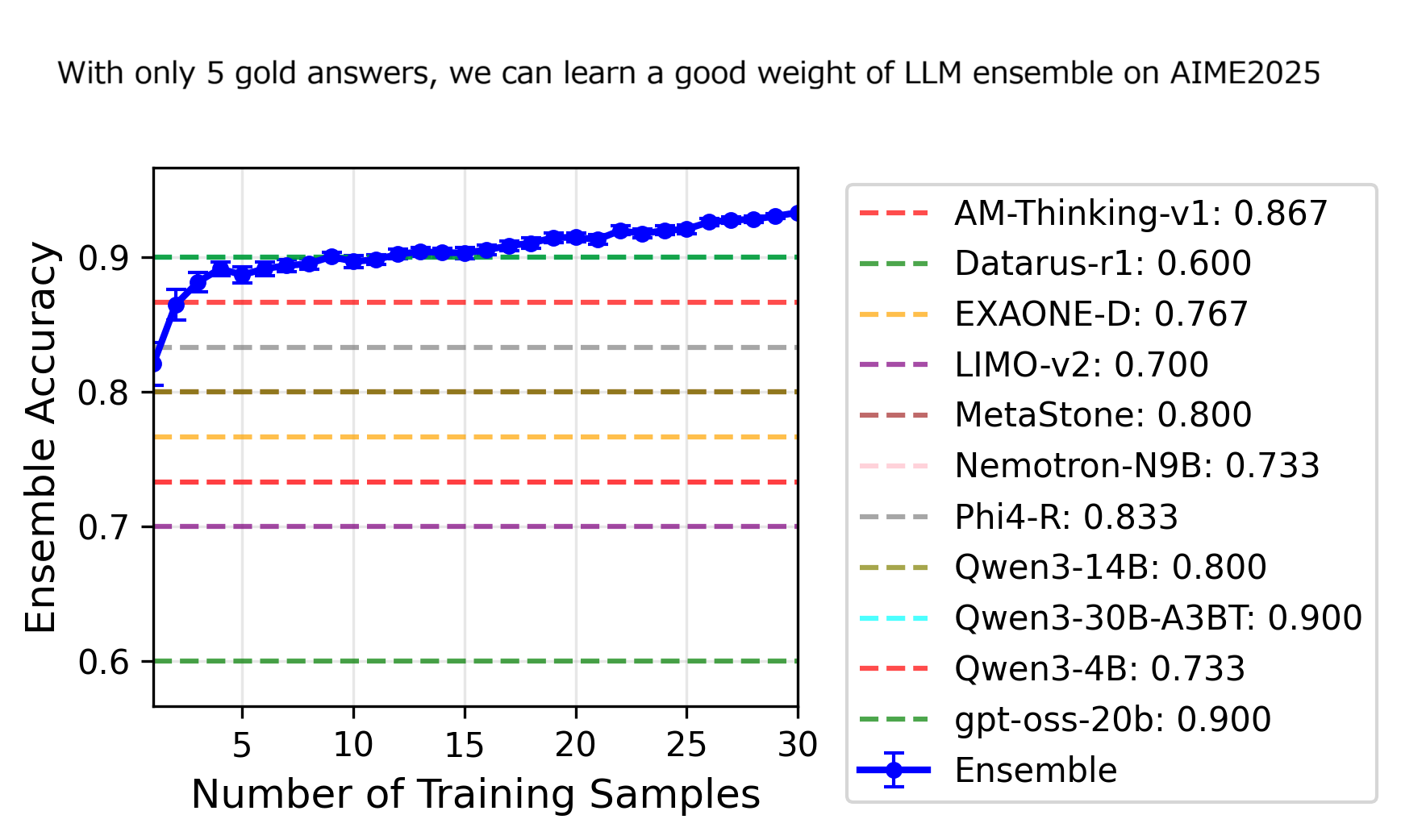

AIME2025 learning

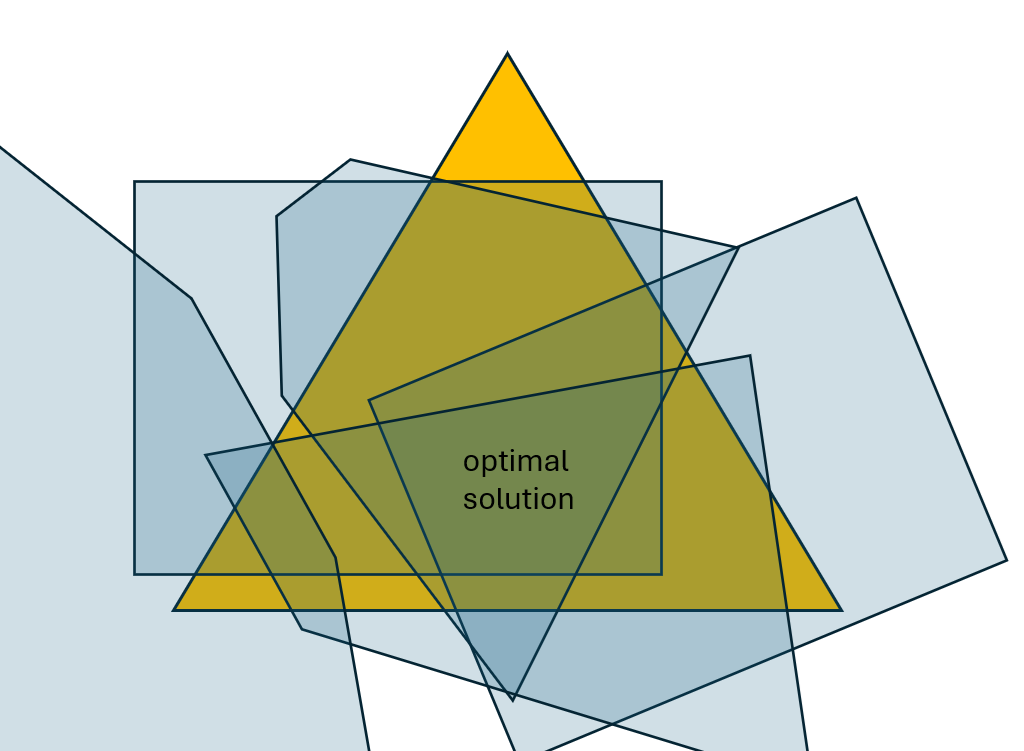

MILP Optimization for Best-of-∞
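The following sketch illustrates why a weighted mixture can beat each individual model in the N → ∞ limit. It assumes that each generation is drawn from model k with probability w_k, so that majority voting converges to the most probable answer under the mixed answer distribution. The page states that the optimal weights are computed with a mixed-integer linear program; the coarse grid search over two models below is a simple stand-in, not that formulation. All function names and the toy answer distributions are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Sequence, Tuple

AnswerDist = Dict[str, float]                 # answer -> probability for one model
Problem = Tuple[Sequence[AnswerDist], str]    # (per-model distributions, correct answer)


def mixture_mode(dists: Sequence[AnswerDist], weights: Sequence[float]) -> str:
    """Most probable answer under the weighted mixture of the models."""
    mixed: Dict[str, float] = {}
    for dist, w in zip(dists, weights):
        for answer, p in dist.items():
            mixed[answer] = mixed.get(answer, 0.0) + w * p
    return max(mixed, key=mixed.get)


def limit_accuracy(problems: Sequence[Problem], weights: Sequence[float]) -> float:
    """Fraction of problems whose mixture mode is the correct answer."""
    hits = sum(mixture_mode(dists, weights) == gold for dists, gold in problems)
    return hits / len(problems)


def grid_search_two_models(problems: Sequence[Problem], steps: int = 10) -> Tuple[float, float]:
    """Coarse search over w in [0, 1] for the weights (w, 1 - w).

    Returns (best w, best accuracy). A stand-in for the MILP, two models only.
    """
    best_w, best_acc = 0.0, -1.0
    for i in range(steps + 1):
        w = i / steps
        acc = limit_accuracy(problems, (w, 1.0 - w))
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w, best_acc


# Toy, made-up distributions: model A is reliable on problem 1 only,
# model B on problem 2 only. The correct answer is "x" in both cases.
TOY_PROBLEMS: List[Problem] = [
    ([{"x": 0.9, "y": 0.1}, {"x": 0.4, "y": 0.6}], "x"),
    ([{"x": 0.4, "y": 0.6}, {"x": 0.9, "y": 0.1}], "x"),
]

if __name__ == "__main__":
    print("model A alone:", limit_accuracy(TOY_PROBLEMS, (1.0, 0.0)))
    print("model B alone:", limit_accuracy(TOY_PROBLEMS, (0.0, 1.0)))
    print("equal mixture:", limit_accuracy(TOY_PROBLEMS, (0.5, 0.5)))
    print("grid search  :", grid_search_two_models(TOY_PROBLEMS))
```

In the toy data each model alone answers one of the two problems correctly, while the equal mixture answers both, because the confident model outweighs the unsure one on each problem. A grid search scales poorly with the number of models, which is one reason an exact optimization formulation is attractive.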

BibTeX

@misc{komiyama2025bestofinftyasymptoticperformance,
  title = {Best-of-$\infty$ -- Asymptotic Performance of Test-Time Compute},
  author = {Junpei Komiyama and Daisuke Oba and Masafumi Oyamada},
  year = {2025},
  eprint = {2509.21091},
  archivePrefix = {arXiv},
  primaryClass = {stat.ML},
  url = {https://arxiv.org/abs/2509.21091}
}